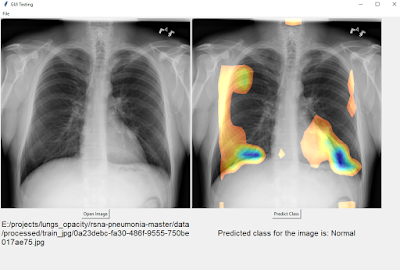

Simple GUI for Model Inferences

Running inferences by loading models via scripts is cool, but the end user may not be familiar with these ways of obtaining predictions. If deep learning is to be useful as a tool to the user, it should be available through a GUI, either web-based or desktop-based. With that in mind, I decided to see what tools were available for creating a desktop GUI that would allow me to:

1) Load a model.
2) Feed that model inputs via a file explorer.
3) Obtain inference results, display them via labels, and display any additional data as required.

Since I was programming on the Windows desktop, I decided to check out the machine learning offerings available in the Visual C# IDE. I found out that Microsoft's CNTK was available for C#, so I decided to give it a go. I already had a pretrained model for pneumonia detection (I trained and tested it in my previous post). I now needed a way to use that model in C# via CNTK. Luckily, there ...