Flask, Docker and inferences via a server/cloud

While my Protein Classification model was training in the background, I focused on learning the skills and technologies needed to 'production-ize' ML models for inference. Hosting my models on a server would make a useful model available to more people than just me.

I learnt about the Flask framework, which lets you expose Python functions over HTTP and have them return data. In the ML case, this means having Flask expose a function that performs inference and sends the results back to the user.
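To make that concrete, here is a minimal sketch of what such a Flask endpoint could look like. This is not code from my project: the `/predict` route, the `run_inference` helper and the "mean pixel value" model are all made-up placeholders, and a real version would call the trained model instead.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def run_inference(pixels):
    """Hypothetical stand-in for a real model: returns the mean pixel value."""
    flat = [float(v) for row in pixels for v in row]
    return sum(flat) / len(flat) if flat else 0.0


@app.route("/predict", methods=["POST"])
def predict():
    # Expect a JSON body such as {"input_image": [[0.0, 1.0], [2.0, 3.0]]}.
    payload = request.get_json(silent=True) or {}
    pixels = payload.get("input_image")
    if pixels is None:
        return jsonify({"error": "missing 'input_image'"}), 400
    return jsonify({"prediction": run_inference(pixels)})
```

Assuming the file is saved as `app.py`, running `flask run` should start a local development server, and a POST to `/predict` with a JSON body returns the placeholder prediction.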

I also learnt about Docker and how I could build and run an image in which the ML model's inference is served by an Apache server, with the help of Flask and WSGI (the interface standard that lets a web server such as Apache talk to a Python application such as Flask).

I'll update this post when I have something working.

UPDATE 1: I obtained a free $300 credit to try Google's cloud services. They offer an ML Engine, so I decided to give it a shot before implementing a Flask/Apache/Docker solution. Another advantage is that the gcloud ML Engine scales with the number of requests, so serving predictions when a lot of people use it should not be an issue.

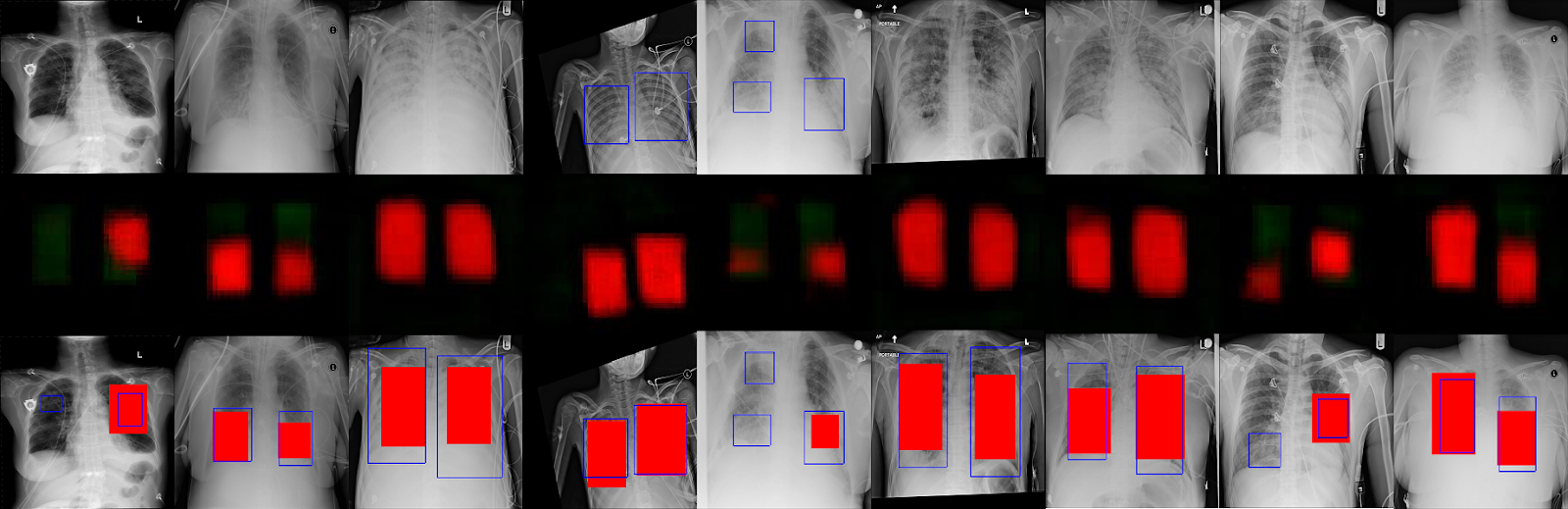

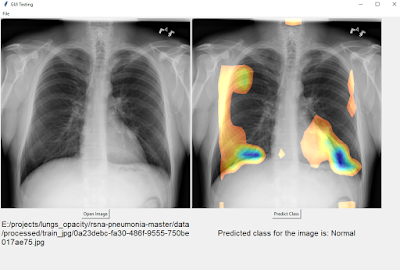

I had a model that I'd previously used for the Pneumonia detection competition, but I had only saved its weights. To use the ML Engine inference/prediction service, I instantiated the model again, loaded the weights and saved the model again, this time using TensorFlow's simple_save function.

https://www.tensorflow.org/api_docs/python/tf/saved_model/simple_save

I used inputs={"input_image": lung_x_input}, outputs={"output_image": hg_output} (these variables are available in my inference script). simple_save then writes the model in the SavedModel format, which is meant for serving/inference and is compatible with the Cloud ML Engine.

Once the model was saved, I uploaded it to a storage bucket on gcloud. Then I created an ML model on gcloud and pointed it to the saved model in the bucket. gcloud runs some checks and confirms whether the model is compatible with the prediction service. When I first uploaded the model, I got an error. After researching it, I fixed it by making sure that the batch dimension of the input tf placeholder was set to None. After re-saving the model, I followed the above steps again and this time the model was all set to make predictions.
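The save step with the batch-size fix can be sketched roughly as follows. This is not my actual inference script: the 64x64x1 image shape and the one-variable "model" are placeholders for illustration, and the code assumes TensorFlow 2.x is installed and uses its `tf.compat.v1` module to reach the TF1-style API. Only the names `lung_x_input`, `hg_output`, `input_image` and `output_image` come from the project.

```python
import os
import tempfile

import tensorflow.compat.v1 as tf


def export_for_serving(export_dir):
    """Build a tiny stand-in graph and export it with simple_save.

    export_dir must not exist yet; simple_save creates it.
    """
    graph = tf.Graph()
    with graph.as_default():
        # Batch dimension is None so the service can send any number of images.
        # The 64x64x1 image shape is an assumption for this sketch.
        lung_x_input = tf.placeholder(
            tf.float32, shape=[None, 64, 64, 1], name="lung_x_input"
        )
        # Stand-in for the real network: scales the input by a single variable.
        scale = tf.get_variable("scale", shape=[], initializer=tf.ones_initializer())
        hg_output = tf.multiply(lung_x_input, scale, name="hg_output")

        with tf.Session(graph=graph) as sess:
            # In the real script the trained weights would be restored here.
            sess.run(tf.global_variables_initializer())
            tf.saved_model.simple_save(
                sess,
                export_dir,
                inputs={"input_image": lung_x_input},
                outputs={"output_image": hg_output},
            )
```

Calling `export_for_serving(os.path.join(tempfile.mkdtemp(), "export"))` should leave a `saved_model.pb` file and a `variables` folder in the export directory, which is the folder that gets uploaded to the bucket.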

One of the ways to send and receive data is via the gcloud command-line tool. You can install it and run it from Windows PowerShell. The command I used to send data for inference is:

gcloud ml-engine predict --model lungs_test --json-instances ./data/data_61_new.json >>./output/preds_61.txt

In this case I was sending image data and my model was sending image data back. If your model accepts float32 input, the JSON file simply needs to contain:

{"input_image": array-data-for-image}

The array data for your image is simply the set of numbers that define the image, written as nested lists. Note that the JSON key input_image must match the input name used when saving the model.
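As a rough illustration, a file like this can be written with nothing but Python's standard library. The `write_instances` helper and the tiny 2x2 image below are made up for the example; the only parts taken from my setup are the `input_image` key and the one-JSON-object-per-line layout that `--json-instances` expects.

```python
import json
import os
import tempfile


def write_instances(images, path):
    """Write one JSON object per line; each line is one prediction instance."""
    with open(path, "w") as f:
        for image in images:
            # The key must match the input name used when the model was saved.
            f.write(json.dumps({"input_image": image}) + "\n")


# Hypothetical 2x2 single-channel image as nested lists of floats.
example_image = [[[0.0], [0.25]], [[0.5], [1.0]]]
example_path = os.path.join(tempfile.mkdtemp(), "data_example.json")
write_instances([example_image], example_path)
```

A real image would first be converted to nested lists (for a NumPy array, `array.tolist()` does this) before being passed to the helper.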

Since I was going to receive an image as a result, I needed to save the data returned by the service. Adding >>./output/preds_61.txt at the end of the command appended that data to a text file.

To confirm that the model was indeed sending back an image prediction, I loaded that text file in Python and did some processing to view the image. And indeed it did predict the image as I expected! There's still some post-processing I need to do to get the final output though. I will most likely upload the code for this once I've completed the project in its entirety.

UPDATE 2: I got real busy with other projects, but it was good to learn some ML-related DevOps. I will take what I learnt here and apply it to another project in the future.
