Fast Style Transfer Implementation

After finishing up the Deep Learning course from Coursera, I wanted to explore more work that was done in the area of style transfer (Gatys et al), which was an assignment for us in that course.

I first wanted to implement the style transfer algorithm outside the confines of the assignment. This was a useful exercise and I learnt some new things, like using the VGG network and some associated helper functions. I also learnt how important tuning is and that it is an iterative process.

While the method is cool, it was too slow to be deployed as a program/app. There had to be a faster way of getting stylized images.

It turns out that a faster, better method based on training a feedforward network already existed, so I was interested in seeing how it works. I came across the work of Logan Engstrom here: https://github.com/lengstrom/fast-style-transfer

I looked over the code and implemented a version of it in Jupyter notebooks. One notebook contains the code for training the network and the other is for generating images with the trained network.

Some lessons learnt while implementing this code:

1) Using tf.InteractiveSession() was slower than using 'with tf.Session() as sess:'. I don't know why this was occurring. I was getting around 800s for an epoch with the former, compared to around 580s with the latter.

2) Also, the tf.InteractiveSession() approach showed a lot of data transfer activity in GPU memory and RAM, whereas it stayed at a much lower percentage with the latter approach.

3) In a previous implementation, some of the losses were coming in at infinity. It turns out that the denominator values for the losses exceeded the int32 range and overflowed (wrapping around to zero), so I had to cast them to float.

4) I was interested in seeing if there were other methods apart from feed_dict to feed the data to the network. I tried the new dataset-iterator approach, which was good to learn, but it wasn't any faster in my case. It also showed data being transferred between the RAM and GPU much more constantly than with the feed_dict method, so I went back to feed_dict.

5) I tried working with a smaller dataset of 1000 images first, to tweak the look of the generated image and get some hyperparameters tuned. It turns out that 1000 images weren't enough to capture adequate information about images. As you might have seen in explanations of CNNs, the lower-level layers of a well-trained network capture edge information and the higher-level layers capture larger-scale information, and the network does this well only with larger amounts of data. I wasted way too much time working with the smaller dataset until I finally decided to try training the network on 10000 images. The difference after around 20 epochs was noticeable: I could see the edges of the input image forming and the style slowly getting applied to it.

6) I created an HDF5 file so that the content and style image data could be loaded into RAM directly. Other scripts read the image files directly from disk for processing. I wanted the input pipeline to be as fast as possible and I didn't want the GPU waiting on data. Info on how to create an HDF5 file and its usage: http://machinelearninguru.com/deep_learning/data_preparation/hdf5/hdf5.html
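
The overflow in lesson 3 is easy to reproduce outside TensorFlow. The sketch below is only an illustration in NumPy, not the notebook code: it assumes a style-loss normalizer of the form 4 * n_c^2 * (n_h * n_w)^2 and a hypothetical 256 x 256 x 64 feature map, and the function name is made up. With those assumed sizes the product is 2^46, which wraps to exactly zero in int32, so the loss divides by zero.

```python
import numpy as np

def style_denominator(n_h, n_w, n_c, dtype):
    """Assumed normalizer 4 * n_c^2 * (n_h * n_w)^2, evaluated in `dtype`."""
    # 1-element arrays keep every intermediate product in `dtype`.
    hw = np.array([n_h * n_w], dtype=dtype)
    c = np.array([n_c], dtype=dtype)
    return (4 * c * c * hw * hw)[0]

# Hypothetical feature map: 256 x 256 spatial positions, 64 channels.
denom_int = style_denominator(256, 256, 64, np.int32)      # 2**46 wraps to 0
denom_float = style_denominator(256, 256, 64, np.float64)  # 2**46, exact

squared_error = np.float64(1.0e6)  # made-up numerator, for illustration only
with np.errstate(divide="ignore"):
    loss_int = squared_error / denom_int   # division by zero gives inf
loss_float = squared_error / denom_float   # small but finite

print(denom_int, denom_float, loss_int, loss_float)
```

Casting the shape values to float before multiplying, as in the float64 call above, avoids the wrap-around.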

Potential Improvements: Training on the full MSCOCO dataset and tweaking hyperparameters such as the content, style and variation weights. I'd already spent more time than necessary on this project so I had to move on.
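
For reference, here is a rough sketch of how those three weights typically enter the objective, assuming the usual weighted-sum form. This is not taken from the notebooks: the function names, the squared-difference form of the total variation term and the weight values are all placeholders for illustration.

```python
import numpy as np

def total_variation(img):
    """Sum of squared differences between neighbouring pixels of an (H, W, C) array."""
    dh = img[1:, :, :] - img[:-1, :, :]   # vertical neighbours
    dw = img[:, 1:, :] - img[:, :-1, :]   # horizontal neighbours
    return float(np.sum(dh ** 2) + np.sum(dw ** 2))

def total_loss(content_loss, style_loss, tv_loss,
               content_weight, style_weight, tv_weight):
    """Weighted sum of the three terms; the weights are the knobs to tune."""
    return (content_weight * content_loss
            + style_weight * style_loss
            + tv_weight * tv_loss)

flat = np.ones((4, 4, 3))        # constant image: no variation at all
spike = np.zeros((2, 2, 1))
spike[0, 0, 0] = 1.0             # one bright pixel with two neighbours

tv_flat = total_variation(flat)
tv_spike = total_variation(spike)

# Placeholder loss values and weights, chosen only to show the arithmetic.
combined = total_loss(1.0, 2.0, 3.0,
                      content_weight=10.0, style_weight=100.0, tv_weight=1000.0)
```

Raising the style weight relative to the content weight pushes the output towards the painting, while the variation weight penalizes pixel-level noise.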

The Jupyter notebooks are here: https://github.com/varunvprabhu/fast_style_transfer

Setup used: TensorFlow 1.9 for GPU along with the requisite CUDA and cuDNN libraries on a Win10 machine with a Ryzen 1600, 16GB RAM and an NVIDIA GeForce GTX 1070.

Training takes approximately 580s per epoch across 10000 images of the MSCOCO dataset. The network was trained for 30 epochs.
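
As a quick back-of-the-envelope check, the figures above translate into total training time and throughput as follows (the variable names are just for this snippet):

```python
seconds_per_epoch = 580
images_per_epoch = 10_000
epochs = 30

total_seconds = seconds_per_epoch * epochs               # 17400 s
total_hours = total_seconds / 3600
images_per_second = images_per_epoch / seconds_per_epoch

print(f"{total_hours:.1f} h total, {images_per_second:.1f} images/s")
# prints "4.8 h total, 17.2 images/s"
```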

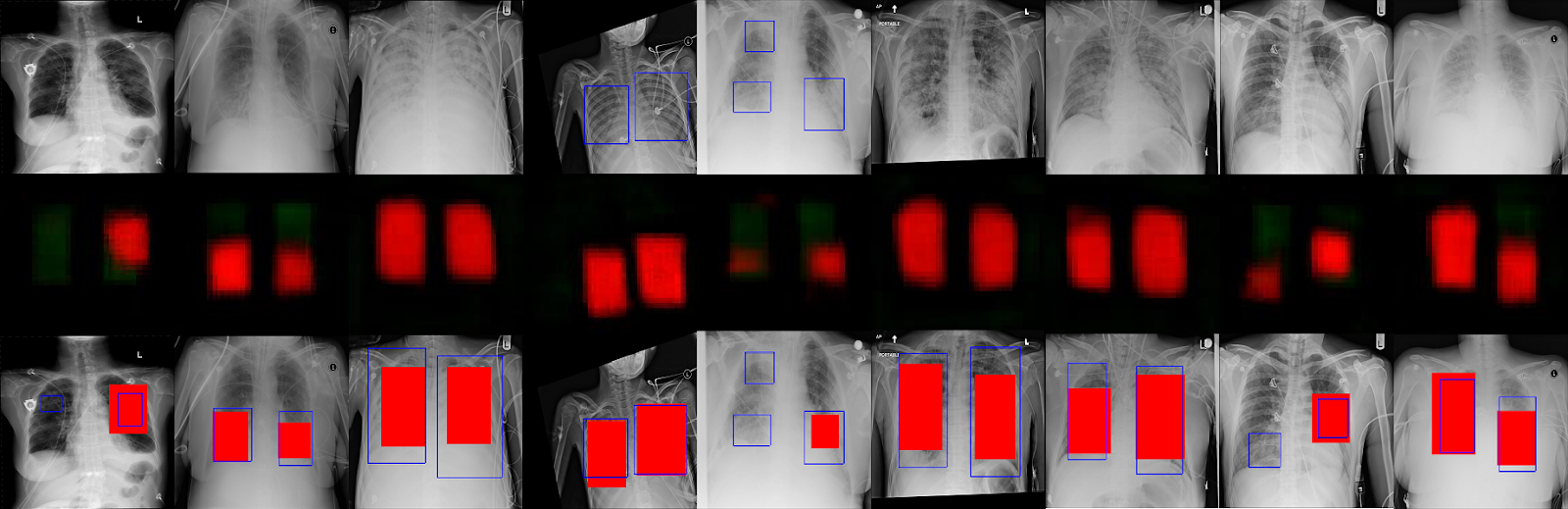

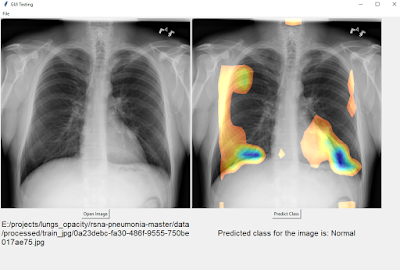

Below are the results from both the feedforward and the slower method.

Results from the feedforward method:

1) The two styles selected for feedforward were Starry Night by Vincent van Gogh and The Great Wave off Kanagawa by Hokusai.

2) An average color from the style image seems to be applied to the transformed image. Starry Night is mostly blue to dark blue, whereas the wave is mostly paper yellow.

3) The edges are more pronounced in the images with the wave style. A ripple going upwards from left to right seems quite prominent in the transformed images.
